Artificial intelligence (AI) has made impressive strides in recent years, and one of its most intriguing applications comes close to reading people’s minds. Researchers record brain activity with functional magnetic resonance imaging (fMRI), then train models to decode that activity and reconstruct the images people see. This is an exciting development, as it offers a unique way to understand how the brain represents the world and how computer vision models relate to our visual system.

One major challenge in this field is reconstructing realistic images with high semantic fidelity. Deep generative models have been employed for this task, but reconstructing real-world images at high quality remains a hard problem. One of the main difficulties is the variability of natural images: a good reconstruction has to capture color, shape, and higher-level perceptual features accurately.

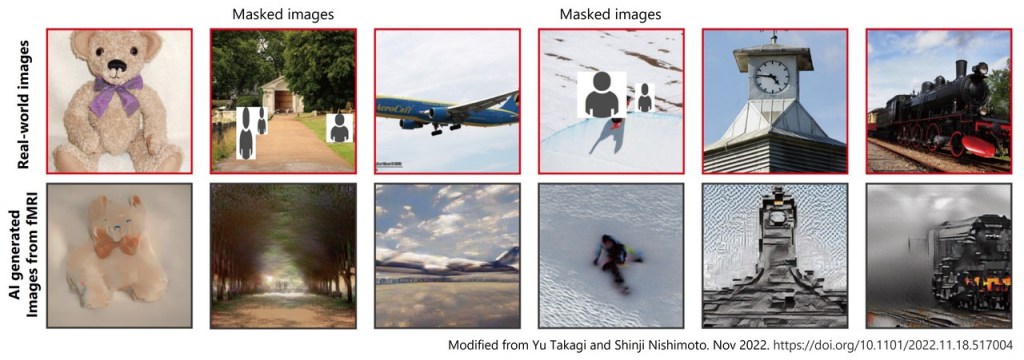

However, recent research has made significant progress in this area. For example, one study reconstructed real-world images from fMRI data with a diffusion model, an approach that reduces the computational cost of deep generative models while preserving high generative performance. This AI model even predicted objects hidden behind masks, similar to how our brain automatically fills in this information (see the image above). Another study reconstructed face images from fMRI data using a variational autoencoder trained with an unsupervised generative adversarial network (GAN) procedure. The system could perform robust pairwise decoding, classify gender accurately, and even decode which face was imagined rather than seen.
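To make "pairwise decoding" more concrete, here is a minimal sketch of one common way such a score can be computed. It is an illustration under stated assumptions, not the procedure used in the studies above: the function names `pearson` and `pairwise_decoding_accuracy` are hypothetical, and the feature vectors are made-up numbers standing in for image features predicted from brain activity. For each stimulus, the predicted vector is compared with the true vector and with one distractor at a time. The decoder scores a win when the true vector correlates more strongly, so random guessing should land near 0.5.

```python
import math
from typing import Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation between two equal-length vectors."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant vector carries no correlation information
    return cov / math.sqrt(var_a * var_b)


def pairwise_decoding_accuracy(
    predicted: Sequence[Sequence[float]],
    actual: Sequence[Sequence[float]],
) -> float:
    """Fraction of (target, distractor) pairs in which the predicted vector
    correlates more strongly with its true target than with the distractor."""
    n = len(predicted)
    if n != len(actual) or n < 2:
        raise ValueError("need at least two matched prediction/target pairs")
    wins = 0
    trials = 0
    for i in range(n):
        match = pearson(predicted[i], actual[i])
        for j in range(n):
            if j == i:
                continue
            trials += 1
            if match > pearson(predicted[i], actual[j]):
                wins += 1
    return wins / trials


# Toy data (assumed for illustration): three stimuli, four features each.
actual_features = [[1, 0, 0, 2], [0, 1, 3, 0], [2, 2, 0, 1]]
perfect_score = pairwise_decoding_accuracy(actual_features, actual_features)
```

A score like this is attractive because it only asks whether the decoded features point to the right stimulus rather than a wrong one, which sidesteps the harder question of how realistic a reconstructed image looks.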

Despite these advancements, AI-based visual reconstruction still faces many challenges. There is no standardized procedure for evaluating reconstruction quality, which makes it difficult to compare existing methods. Additionally, the brain’s visual representations are partly invariant to differences between objects or image details, so a given brain activation pattern does not necessarily correspond to a single, unique stimulus.

In conclusion, while AI may not yet be able to read your mind, researchers are making great strides in understanding how the brain processes visual information and reconstructing the images people see. This could have important implications for brain-computer interfaces and our understanding of the brain’s visual processing mechanisms.

Who knows what new advances in AI and neuroscience may bring next?